Hi, I’m higashi.

This time, I introduce the meaning of the parameters inside an LSTM model constructed with TensorFlow-Keras.

By reading this page, you will be able to easily understand the internal structure of an LSTM model.

OK, let’s get started!!

About Weights and Bias Inside LSTM Model

First, I explain where and how the weights and biases inside the LSTM model are used.

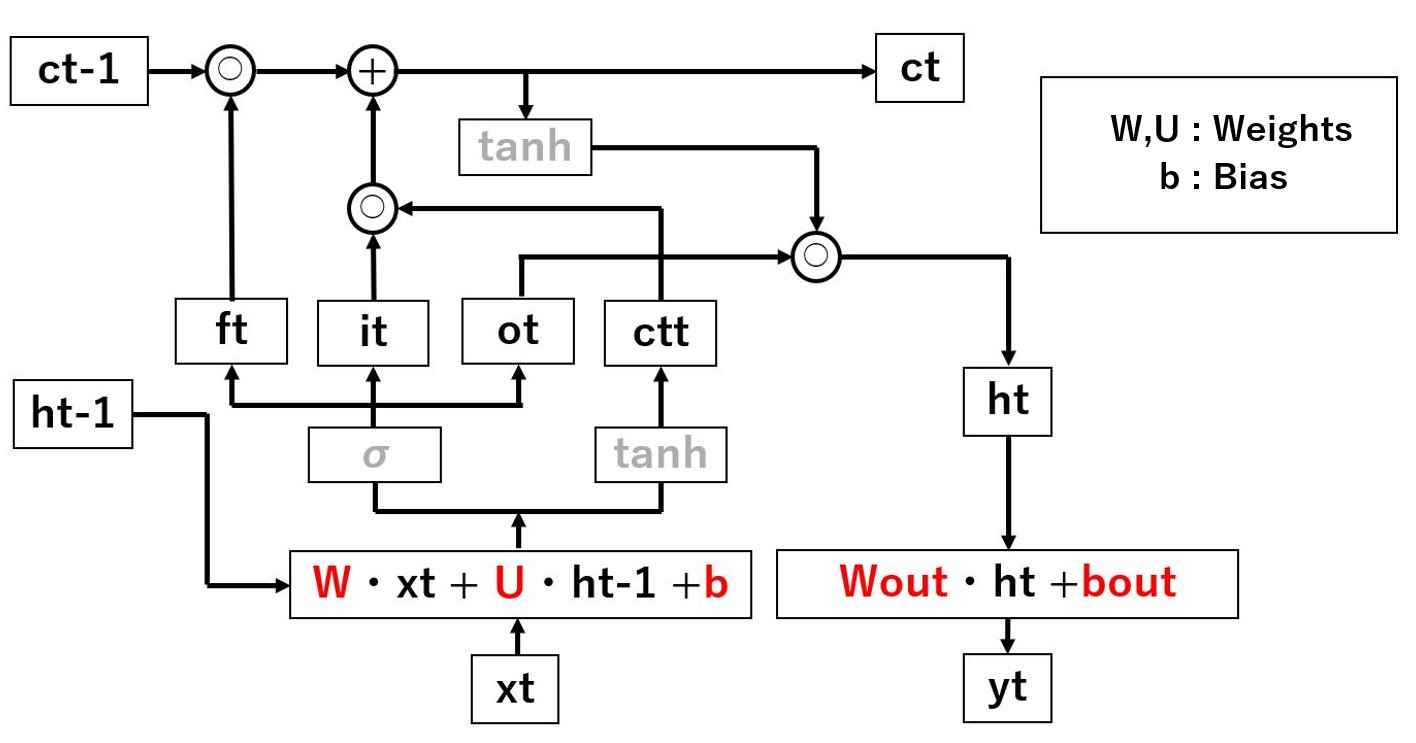

This illustration shows the structure of an LSTM model.

The characters in red are the weights and biases.

You can see that there are five kinds of parameters:

(W, U, b, Wout, bout)

Please remember this number, "five".
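For reference, the LSTM computation is commonly written as the equations below. This is a sketch in the row-vector convention (x_t W) used by the NumPy code in this article: the subscripts i, f, c, o mark the four gate blocks, \tilde{c}_t corresponds to "ctt" in the code, \odot is the element-wise product, c_0 = h_0 = 0, and T is the last time step (look_back).

$$
\begin{aligned}
i_t &= \sigma(x_t W_i + h_{t-1} U_i + b_i) \\
f_t &= \sigma(x_t W_f + h_{t-1} U_f + b_f) \\
\tilde{c}_t &= \tanh(x_t W_c + h_{t-1} U_c + b_c) \\
o_t &= \sigma(x_t W_o + h_{t-1} U_o + b_o) \\
c_t &= f_t \odot c_{t-1} + i_t \odot \tilde{c}_t \\
h_t &= o_t \odot \tanh(c_t) \\
y &= h_T W_{out} + b_{out}
\end{aligned}
$$

Here W = [W_i W_f W_c W_o], U = [U_i U_f U_c U_o] and b = [b_i b_f b_c b_o] are the concatenated parameters, which is where the five parameters (W, U, b, Wout, bout) come from.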

Confirm the Internal Parameters of the LSTM Model

OK next, let's check the parameters of the LSTM model.

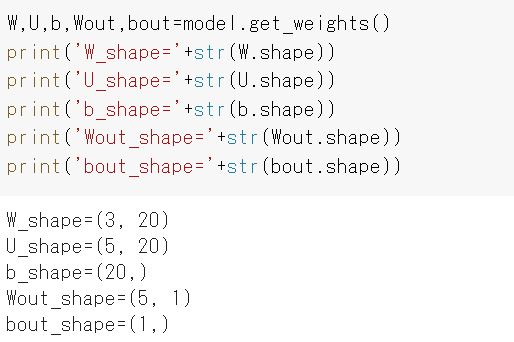

In TensorFlow-Keras, you can check them by calling the "model.get_weights()" method.

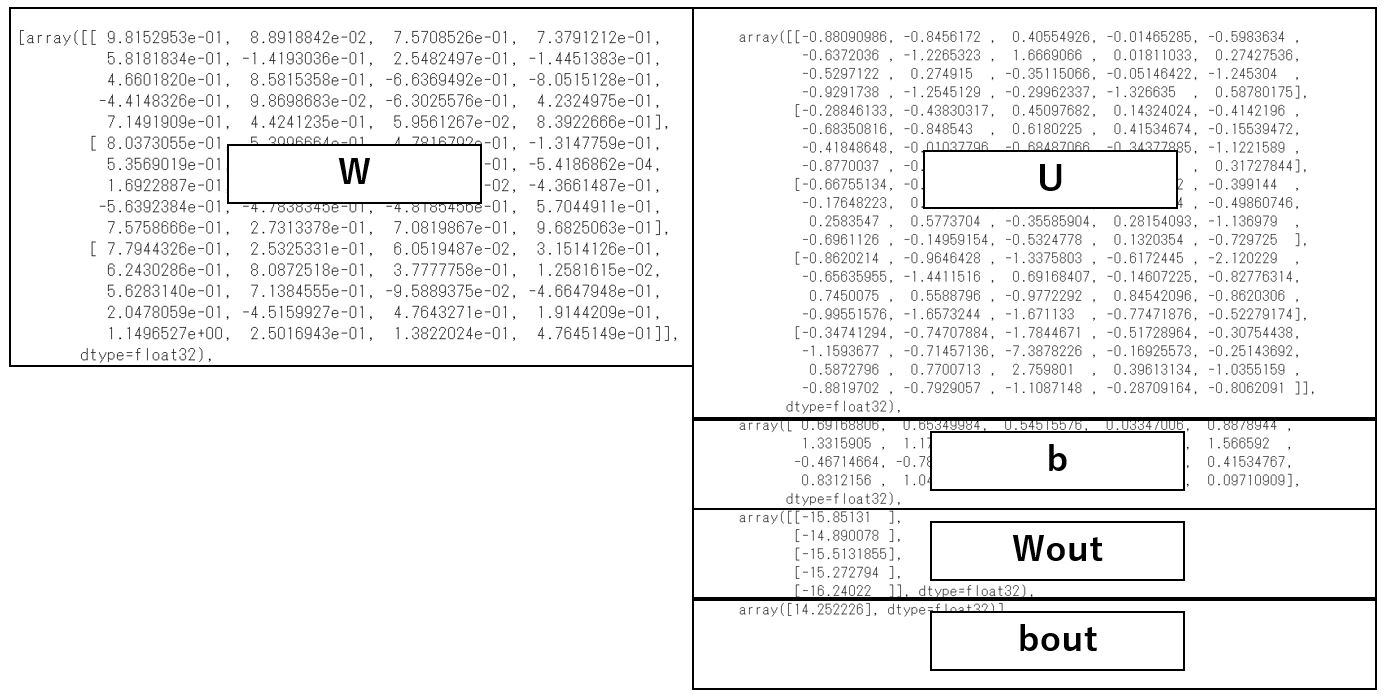

This image is the result.

You can confirm that there are five kinds of parameters.

By the way, this LSTM model's input dimension is 3, its hidden layer dimension is 5, and its output dimension is 1.

The shapes of these parameters are as follows, respectively:

W_shape=(3, 20)

U_shape=(5, 20)

b_shape=(20,)

Wout_shape=(5, 1)

bout_shape=(1,)

You can find these numbers (3, 5, 1) in each shape.

However, you may be confused by the number 20.

What is it?

I explain it in the next section.

About the Shape of LSTM Parameters

OK next, I explain the shapes of the LSTM parameters.

For an LSTM model constructed with TensorFlow-Keras, with "xu" as the input dimension, "hu" as the hidden layer dimension, and "yu" as the output dimension, the weights and biases have the following shapes.

W_shape=(xu, hu×4)

U_shape=(hu, hu×4)

b_shape=(hu×4,)

Wout_shape=(hu, yu)

bout_shape=(yu,)
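As a quick check of these formulas, here is a small sketch that computes the expected shapes and the total parameter count. It assumes the model is one LSTM layer followed by one Dense output layer with default settings (biases enabled); the functions keras_lstm_param_shapes and count_params are hypothetical helpers for illustration, not part of Keras.

```python
def keras_lstm_param_shapes(xu, hu, yu):
    """Expected shapes of model.get_weights() for LSTM(hu) + Dense(yu)
    with input dimension xu (hypothetical helper, not a Keras API)."""
    return {
        "W": (xu, hu * 4),   # LSTM input kernel: 4 gate blocks side by side
        "U": (hu, hu * 4),   # LSTM recurrent kernel: same 4 blocks
        "b": (hu * 4,),      # LSTM bias: one hu-long block per gate
        "Wout": (hu, yu),    # Dense kernel
        "bout": (yu,),       # Dense bias
    }


def count_params(shapes):
    """Sum of the number of elements over all parameter shapes."""
    total = 0
    for shape in shapes.values():
        n = 1
        for d in shape:
            n *= d
        total += n
    return total


shapes = keras_lstm_param_shapes(3, 5, 1)
for name, shape in shapes.items():
    print(name, shape)
print("total parameters:", count_params(shapes))  # 186
```

For xu=3, hu=5, yu=1 this gives 186 parameters in total (180 for the LSTM layer and 6 for the Dense layer), which should agree with what model.summary() reports for such a model.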

What is the "×4" in W, U, and b?

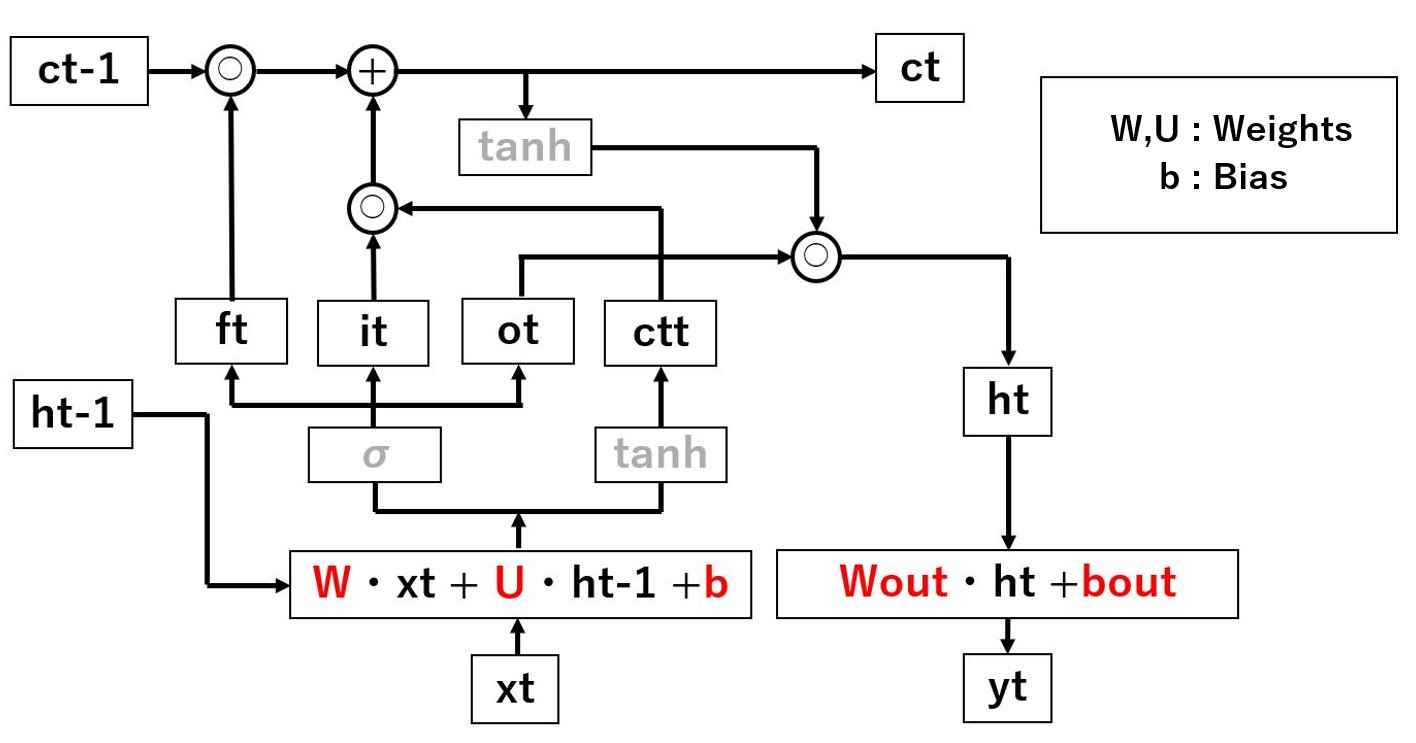

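Here is the LSTM model structure again.

As this image shows, 4 vectors (it, ft, ot, ctt) are computed from (W, U, b).

The "×4" in the shapes exists because W, U, and b each hold the parameters for all 4 of these vectors side by side. In Keras, the blocks are stored in the order i, f, c, o, and each block is hu wide.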

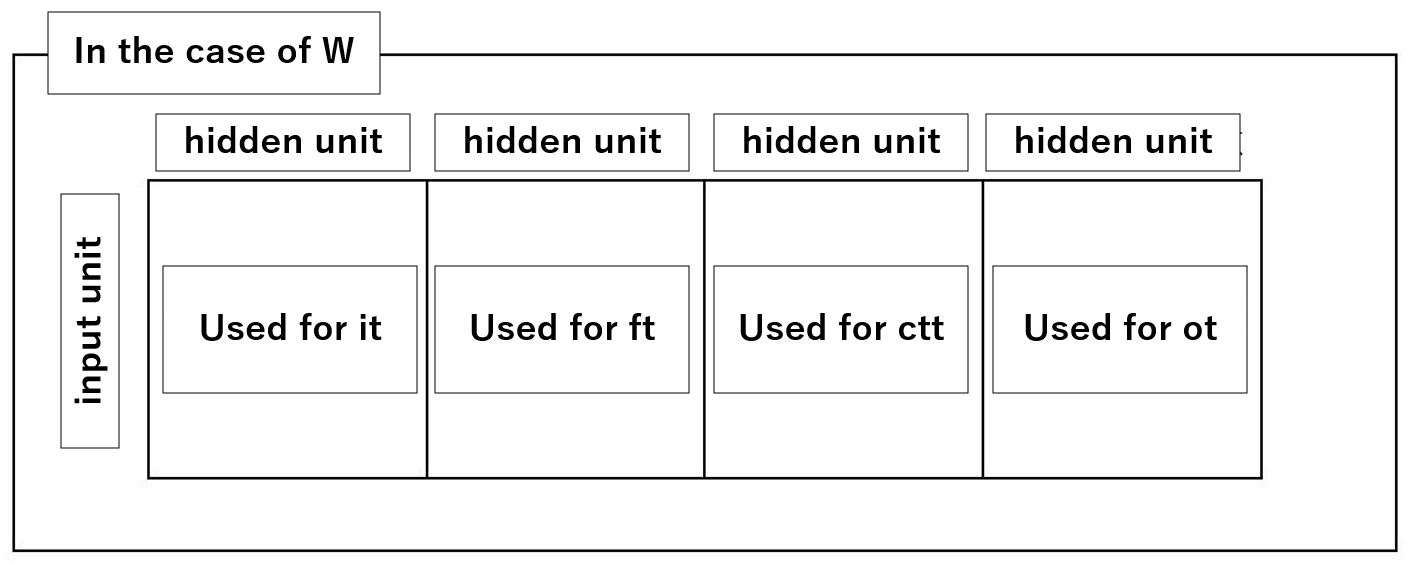

This is an illustration of the shape of W.

U and b are split into 4 blocks in the same way (b is a 1-D vector of length hu×4).
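The "×4" layout can also be checked numerically. This sketch uses random arrays with the Keras shapes (not trained weights) and assumes the gate order i, f, c, o, the same order the slicing in this article uses. It shows that computing all 20 pre-activations with one product and then cutting them into 4 blocks gives the same values as slicing W, U, and b per gate first; the helper name gate is hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
xdim, hl_unit = 3, 5

# Random stand-ins with the Keras shapes (NOT trained weights)
W = rng.normal(size=(xdim, 4 * hl_unit))
U = rng.normal(size=(hl_unit, 4 * hl_unit))
b = rng.normal(size=(4 * hl_unit,))
x = rng.normal(size=(xdim,))      # one time step of input
h = rng.normal(size=(hl_unit,))   # previous hidden state

# (a) One big product, then cut the 20 pre-activations into 4 blocks of 5
z = x @ W + h @ U + b
zi, zf, zc, zo = np.split(z, 4)


# (b) Slice W, U, b per gate first (block k covers columns k*5 .. k*5+4)
def gate(k):
    cols = slice(k * hl_unit, (k + 1) * hl_unit)
    return x @ W[:, cols] + h @ U[:, cols] + b[cols]


for k, (name, blk) in enumerate(zip("ifco", (zi, zf, zc, zo))):
    print(name, blk.shape, np.allclose(blk, gate(k)))  # each line ends with True
```

Storing the four gates side by side lets the layer compute all of them with a single matrix product per input, and the 4 vectors are recovered just by slicing.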

LSTM Calculation Demonstration by Using Parameters

OK, let's carry out the LSTM calculation ourselves, using the parameters obtained above.

Learned Model : model

Input Layer Unit : xdim=3

Hidden Layer Unit : hl_unit=5

Look Back Number : look_back=4

In the code below, "Xt" is sample input data with the shape (look_back, xdim).

```python
import numpy as np

#definition of each unit
xdim=3
hl_unit=5
look_back=4

#get weights and bias
#(model: learned Keras model, Xt: sample input of shape (look_back, xdim))
W,U,b,Wout,bout=model.get_weights()

#split W, U, b into the 4 gate blocks (Keras order: i, f, c, o)
Wi=W[:,0:hl_unit]
Wf=W[:,hl_unit:2*hl_unit]
Wc=W[:,2*hl_unit:3*hl_unit]
Wo=W[:,3*hl_unit:]

Ui=U[:,0:hl_unit]
Uf=U[:,hl_unit:2*hl_unit]
Uc=U[:,2*hl_unit:3*hl_unit]
Uo=U[:,3*hl_unit:]

bi=b[0:hl_unit]
bf=b[hl_unit:2*hl_unit]
bc=b[2*hl_unit:3*hl_unit]
bo=b[3*hl_unit:]

#definition of sigmoid function
def sigmoid(x):
    return(1.0/(1.0+np.exp(-x)))

#LSTM calculation (cell state c and hidden state h start at zero)
c=np.zeros(hl_unit)
h=np.zeros(hl_unit)
for i in range(look_back):
    x=Xt[i]
    it=sigmoid(np.dot(x,Wi)+np.dot(h,Ui)+bi)   #input gate
    ft=sigmoid(np.dot(x,Wf)+np.dot(h,Uf)+bf)   #forget gate
    ctt=np.tanh(np.dot(x,Wc)+np.dot(h,Uc)+bc)  #candidate cell state
    ot=sigmoid(np.dot(x,Wo)+np.dot(h,Uo)+bo)   #output gate
    c=ft*c+it*ctt
    h=np.tanh(c)*ot

#output layer (Dense), applied to the hidden state of the last time step
Yt=np.dot(h,Wout)+bout
```

You can see that the calculation consists of the following steps.

step1. Get the Weights and Biases

step2. Split the Weights and Biases into 4 Blocks

step3. Calculate the LSTM Using These 4 Blocks
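The three steps can also be packaged into one self-contained function. In this sketch, lstm_predict is a hypothetical helper name, random parameters with the Keras shapes stand in for model.get_weights() so that it runs without a trained model, and np.split replaces the manual slicing while assuming the same i, f, c, o order.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def lstm_predict(Xt, W, U, b, Wout, bout):
    """Run the LSTM over Xt (shape (look_back, xdim)), then the Dense output.
    Assumes the Keras gate order i, f, c, o inside W, U and b."""
    hl_unit = U.shape[0]
    # step2: split into the 4 gate blocks
    Wi, Wf, Wc, Wo = np.split(W, 4, axis=1)
    Ui, Uf, Uc, Uo = np.split(U, 4, axis=1)
    bi, bf, bc, bo = np.split(b, 4)
    # step3: recurrent calculation, starting from zero states
    c = np.zeros(hl_unit)
    h = np.zeros(hl_unit)
    for x in Xt:
        it = sigmoid(x @ Wi + h @ Ui + bi)
        ft = sigmoid(x @ Wf + h @ Uf + bf)
        ctt = np.tanh(x @ Wc + h @ Uc + bc)
        ot = sigmoid(x @ Wo + h @ Uo + bo)
        c = ft * c + it * ctt
        h = np.tanh(c) * ot
    return h @ Wout + bout


# step1 stand-in: random parameters with the Keras shapes (NOT a trained model)
rng = np.random.default_rng(42)
xdim, hl_unit, look_back = 3, 5, 4
W = rng.normal(size=(xdim, 4 * hl_unit))
U = rng.normal(size=(hl_unit, 4 * hl_unit))
b = np.zeros(4 * hl_unit)
Wout = rng.normal(size=(hl_unit, 1))
bout = np.zeros(1)
Xt = rng.normal(size=(look_back, xdim))

Yt = lstm_predict(Xt, W, U, b, Wout, bout)
print(Yt.shape)  # (1,)
```

With a trained model, you would pass the arrays from model.get_weights() instead and compare the result with model.predict(Xt[np.newaxis]), which adds the batch dimension that Keras expects. Tiny differences are normal because Keras computes in float32 by default.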

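The result (Yt) should match the output of TensorFlow-Keras's "model.predict", called with a batch dimension added to Xt, e.g. "model.predict(Xt.reshape(1, look_back, xdim))" (assuming the layer uses the default activations, tanh and sigmoid).

Please run it and confirm this yourself.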

That’s all. Thank you!!
